Author: JeGX

How To Linearize the Depth Value

Here is a GLSL code snippet to convert the non-linear value stored in the depth buffer into a linear one:

float f = 1000.0;

float n = 0.1;

float z = (2.0 * n) / (f + n - texture2D(texture0, texCoord).x * (f - n));

where:

– f = camera far plane

– n = camera near plane

– texture0 = depth map.
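To check the formula outside a shader, here is a minimal C++ sketch of the same expression. The function name linearizeDepth and its parameter names are hypothetical; the depth argument stands for the value read from the depth map.

```cpp
#include <cassert>

// CPU-side port of the GLSL expression above (linearizeDepth is a
// hypothetical helper name, not part of any API).
// depth:     raw depth-map sample in [0, 1]
// nearPlane: camera near plane (n), farPlane: camera far plane (f)
double linearizeDepth(double depth, double nearPlane, double farPlane)
{
    assert(nearPlane > 0.0 && farPlane > nearPlane);
    return (2.0 * nearPlane) /
           (farPlane + nearPlane - depth * (farPlane - nearPlane));
}
```

With n = 0.1 and f = 1000.0, a raw depth of 1.0 gives 1.0 and a raw depth of 0.0 gives 2n / (f + n), which is about 0.0002.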

The Art of Texturing in GLSL is Now a Resource of OpenGL.org

The tutorial The Art of Texturing Using the OpenGL Shading Language has been added to the OpenGL.org website, in the OpenGL API, OpenGL Shading Language Sample Code & Tutorials section. Rather cool… 😉

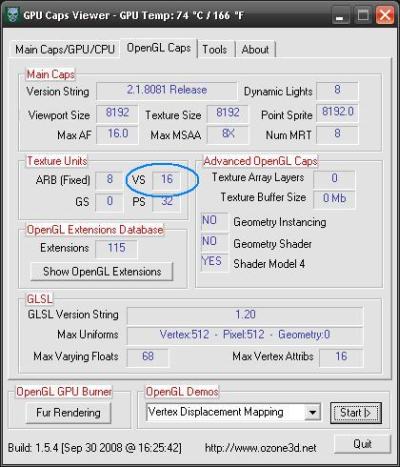

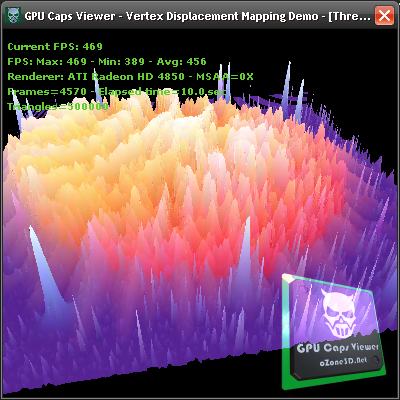

Vertex Displacement Mapping in GLSL Now Available on Radeon!

As I said in this news item, the release of Catalyst 8.10 BETA comes with a nice bugfix: vertex texture fetching is now operational on Radeon (at least on my Radeon HD 4850). For the past 2 or 3 months, Catalyst has exposed texture units to the vertex shader. You can check with GPU Caps Viewer how many texture units are exposed in a vertex shader on your Radeon:

But until now, vertex texture fetching in GLSL didn’t work because of a bug in the driver. That is now history, since VTF works well. For more details about vertex displacement mapping, you can read this rather old (2 years!) tutorial: Vertex Displacement Mapping using GLSL.

This very cool news makes me want to create a new benchmark based on VTF!

I’ve only tested the XP version of Catalyst 8.10. If someone has tested the Vista version, feel free to post a comment…

Next step for the ATI driver team: enable geometry texture fetching, that is, texture fetching inside a geometry shader…

See you soon!

PhysX FluidMark Video

I found this video about FluidMark:

[youtube 9qnX7d-S1l0]

It’s true, FluidMark is really boring. I’ll try to do better for the next benchmarks 😉

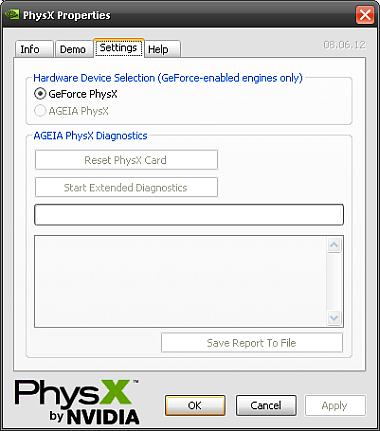

PhysX Control Panel

I don’t know why, but I can no longer start .cpl files directly. CPL files, also called Control Panel applets, can normally be started from the command line. You can find more information about CPL files here.

What I wanted to do was start PhysX.cpl to display the PhysX Properties. After some searching, I found the solution: just enter control followed by the name of the .cpl file in the Windows Run box:

control PhysX.cpl

and you should get this:

Depth of Field

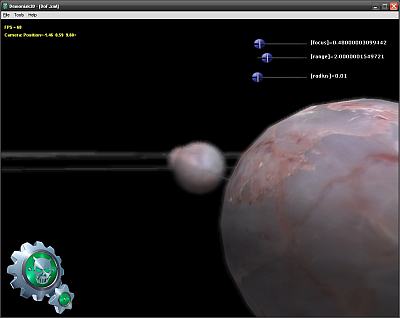

Over the last few days I’ve run some Depth of Field (DoF) tests with the new Demoniak3D, but I’m not satisfied with the result because I still have a few small problems controlling the focus (the region of the field of view that is sharp). The depth of field is done in a post-processing step and uses as its only inputs the color texture of the scene (scene map) and the depth texture (depth map). This algorithm doesn’t use MRT (multiple render targets). Here is a quick preview:

I’ll keep experimenting until I get a working DoF and then add MRT support (MRT will let me explore another very nice effect: SSAO…).

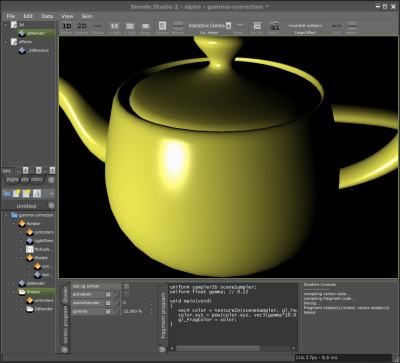

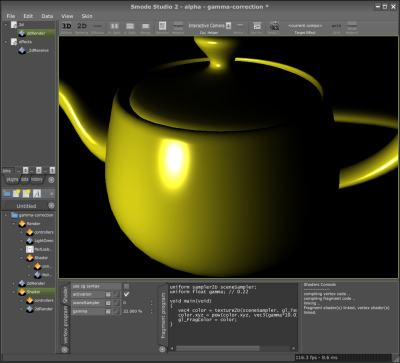

Gamma Correction

Today I coded a small gamma correction filter in Smode. I’ll talk a little more about gamma correction very soon, with a Demoniak3D demo. Here is the result on a simple scene: a teapot lit with a Phong shader.

The following image shows the rendering of the scene done in the usual manner, I mean without gamma correction:

and now the same scene gamma-corrected (factor 2.2):
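The filter itself isn’t shown here, so what follows is only a sketch of the usual power-law correction, assuming the filter raises each linear color channel to 1/2.2. The name gammaCorrect is hypothetical, and the clamp to [0, 1] is my own addition.

```cpp
#include <cassert>
#include <cmath>

// Power-law gamma correction of one linear color channel.
// gammaCorrect is a hypothetical name; 2.2 is the factor quoted in the post.
float gammaCorrect(float linear, float gamma = 2.2f)
{
    assert(gamma > 0.0f);
    // Clamp to [0, 1] first so std::pow never receives a negative base.
    const float c = std::fmin(std::fmax(linear, 0.0f), 1.0f);
    return std::pow(c, 1.0f / gamma);
}
```

Black and white are unchanged, while mid-tones are brightened: a linear value of 0.5 comes out at roughly 0.73.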

Multithreaded Build with Visual Studio 2005

Under Visual Studio 2005 (VC8), you can enable the multithreaded build of projects. This great feature makes it possible to use several CPU cores to compile the source files of a project in parallel. It is a per-project setting, added to the compiler command line: /MPx, where x is the number of cores you want to use. Example: /MP2 to use 2 cores if you have (like me) a Core 2 Duo.

I’ve done some tests with Demoniak3D:

– Demoniak3D (default): Build Time 0:33

– Demoniak3D (/MP2): Build Time 0:15

A great productivity boost for large projects!

Kaspersky Alarms with FurMark

Some users have reported that FurMark 1.4.0 is infected with the Trojan-Downloader.Win32.Agent.vpx trojan. This morning I ran a scan with the latest version of Kaspersky and the very latest version of its database. Kaspersky didn’t find any threat or trojan in the FurMark installer or in the FurMark installation directory. FurMark is clean. MajorGeeks and Softpedia are very good proof of FurMark’s cleanness.

I did some searching on the Net and found other Kaspersky users who get the same false alarms. I guess the problem comes from InnoSetup (the utility used to create FurMark_Setup.exe) and depends on the version of Kaspersky’s database. So if you have Kaspersky antivirus, be sure to update it with the latest database.

I sent FurMark_Setup.exe to Kaspersky Lab and I have just received the reply from the Virus Analyst:

“I suppose it WAS a false alarm, and it has been already fixed.”

This time everything is ok!

Saturate function in GLSL

When converting shaders written in Cg/HLSL, we often come across the saturate() function. This function is not valid in GLSL, even though NVIDIA’s GLSL compiler accepts it (do not forget that NVIDIA’s GLSL compiler is based on the Cg compiler). ATI’s GLSL compiler, however, will reject saturate() with a nice error. This function clamps a value to the range [0.0, 1.0]. In GLSL, there is a simple way to do the same thing: clamp().

Cg code:

float3 result = saturate(texCol0.rgb - Density*(texCol1.rgb));

GLSL equivalent:

vec3 result = clamp(texCol0.rgb - Density*(texCol1.rgb), 0.0, 1.0);

BTW, don’t forget that float4, float3 and float2 are written vec4, vec3 and vec2 in GLSL.

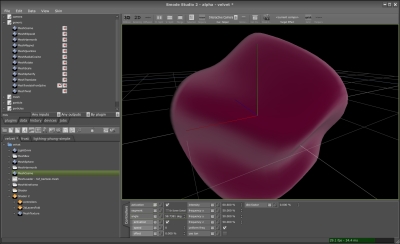

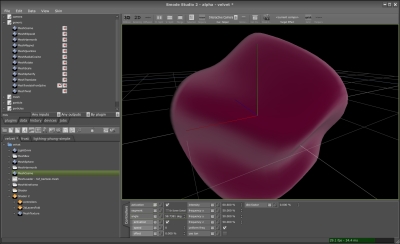

Velvet Shader Preview

Are you ready for a small velvet GLSL shader? Here’s one, or at least a preview of the one I’ve just coded for a demo with Smode. Smode is a piece of software dedicated to creating… demos! And the cool thing is that Smode demos will also be available for Demoniak3D. Don’t look for Smode, it’s not publicly available… Only a few people on this planet are lucky enough to play with it. But if you really want to get your hands on it, just drop me an email…

As soon as the next release of Demoniak3D, 1.24.0 (or more likely 1.30.0, given the huge number of changes), is ready, I’ll put the velvet demo online with its nice GLSL shader. And if I’m late, don’t hesitate to post a short message to wake me up!

GLSL support in Intel graphics drivers

A user from the oZone3D.Net forum asked me for some info about GLSL support on Intel graphics chips. It’s well known (sorry Intel) that Intel’s Windows drivers have poor OpenGL support, and even though Intel’s graphics drivers support OpenGL 1.5, GLSL support is still missing. We can’t find the GL_ARB_shading_language_100 extension, which is how a driver advertises support for the OpenGL Shading Language. GLSL only became a core feature in OpenGL 2.0, so on an OpenGL 1.5 driver this extension is the only way to get it, and I would expect any recent driver to expose it. You can use GPU Caps Viewer to check for the availability of GL_ARB_shading_language_100 (in the OpenGL Caps tab).

Here is an example of an Intel graphics driver that supports OpenGL 1.5 without supporting GLSL:

– Mobile Intel(R) 965 Express Chipset Family

For more examples, look at users’ submissions here: www.ozone3d.net/gpu/db/

Okay, this is my analysis, but what is Intel’s point of view? Here is the answer:

– x3100 & OpenGL Shader (GLSL) thread

– Intel’s answer

I think GLSL support with Windows is not a priority for Intel…

GLSL float to RGBA8 encoder

Packing a float value in [0, 1] into a 4D vector where each component will be stored as an 8-bit integer:

vec4 packFloatToVec4i(const float value)

{

const vec4 bitSh = vec4(256.0*256.0*256.0, 256.0*256.0, 256.0, 1.0);

const vec4 bitMsk = vec4(0.0, 1.0/256.0, 1.0/256.0, 1.0/256.0);

vec4 res = fract(value * bitSh);

res -= res.xxyz * bitMsk;

return res;

}

Unpacking a float value in [0, 1] from a 4D vector where each component was stored as an 8-bit integer:

float unpackFloatFromVec4i(const vec4 value)

{

const vec4 bitSh = vec4(1.0/(256.0*256.0*256.0), 1.0/(256.0*256.0), 1.0/256.0, 1.0);

return(dot(value, bitSh));

}

Source of this code: Gamedev forums
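To experiment with the two functions on the CPU, here is a rough C++ port (my own sketch, not taken from the Gamedev thread). It uses double precision and a hypothetical Vec4 alias, and it does not model how a real RGBA8 render target quantizes each channel, so it only checks the arithmetic of the pack/unpack pair.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<double, 4>;  // hypothetical stand-in for GLSL vec4

// Equivalent of GLSL fract(): fractional part of x.
static double fract(double x) { return x - std::floor(x); }

Vec4 packFloatToVec4i(double value)
{
    assert(value >= 0.0 && value <= 1.0);
    const Vec4 bitSh = {256.0 * 256.0 * 256.0, 256.0 * 256.0, 256.0, 1.0};
    Vec4 res;
    for (int i = 0; i < 4; ++i)
        res[i] = fract(value * bitSh[i]);
    // res -= res.xxyz * bitMsk: each component drops the bits already
    // carried by the previous one (values taken before the subtraction).
    const Vec4 old = res;
    res[1] -= old[0] / 256.0;
    res[2] -= old[1] / 256.0;
    res[3] -= old[2] / 256.0;
    return res;
}

double unpackFloatFromVec4i(const Vec4& v)
{
    // dot(value, bitSh) with the inverse shifts.
    return v[0] / (256.0 * 256.0 * 256.0) + v[1] / (256.0 * 256.0) +
           v[2] / 256.0 + v[3];
}
```

One thing this port makes easy to see: because of fract(), an input of exactly 1.0 packs to four zeros and unpacks to 0.0, so the usable input range is really [0, 1).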

Better, smaller and faster random number generator

I found this cool random number generator on rgba’s website. rgba is a well-known demoscene group specialized in 4k prods. This generator is used in their prods:

static unsigned int mirand = 1;

float sfrand( void )

{

unsigned int a;

mirand *= 16807;

a = (mirand&0x007fffff) | 0x40000000;

return( *((float*)&a) - 3.0f );

}

It produces values in the range [-1.0, 1.0): the bit pattern stored in a is a float in [2.0, 4.0), from which 3.0 is subtracted.

You can find the making-of of this random generator HERE.
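The pointer cast in the original relies on type punning, which modern C++ compilers are allowed to treat as undefined behavior. Here is a hedged variant of the same generator that copies the bits with std::memcpy instead. The struct name SfRand is hypothetical, and the sketch assumes 32-bit IEEE-754 floats.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Same generator as above, with the state held in a struct
// (SfRand is a hypothetical name) instead of a global.
struct SfRand
{
    std::uint32_t state = 1;

    float next()
    {
        state *= 16807u;  // unsigned arithmetic wraps modulo 2^32
        // Random mantissa bits + fixed exponent: a float in [2.0, 4.0).
        const std::uint32_t bits = (state & 0x007fffffu) | 0x40000000u;
        float f;
        static_assert(sizeof f == sizeof bits, "assumes 32-bit float");
        std::memcpy(&f, &bits, sizeof f);  // well-defined bit copy
        return f - 3.0f;                   // shift to [-1.0, 1.0)
    }
};
```

Starting from the seed 1, the first value returned is about -0.995993, since the first state is 16807 and only the low mantissa bits are set.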

GPU Caps Viewer News

Small Log System

Here is a small piece of code that can be useful if you need to quickly generate traces (or logs) in your apps:

#include <fstream>

class cLog

{

public:

// Writes one line to the log file; std::endl also flushes the stream.

static void trace(const char *s)

{ if (s) log << s << std::endl; }

static std::ofstream log;

};

// Opened once at program start-up; the path is hard-coded.

std::ofstream cLog::log("c:\\app_log.txt");

Just use it as follows:

cLog::trace("this is a log");

cLog::trace("this is a second trace");

Geeks3D.com

A short post to announce that I have set up a new website dedicated to the latest news from the world of 3D: graphics cards, OpenGL and Direct3D programming, 3D software, the demoscene and all the other cool things I’m forgetting.

The website is here: Geeks3D.com.

Why this new website? Simply because it’s a perfect way for me to keep pace with the latest in 3D.

I’ve been posting news for a while: first on the oZone3D.Net website, then on the Infamous Lab (actually oZone3D.Net + Infamous Lab), but it was getting confusing to know where to post the news. The solution was very simple: start a new website just for the news!

In a word: Geeks3D.com for 3D news and JeGX’s Infamous Lab for my demos and tests/reviews.

By the way, if you have some cool news that fits Geeks3D and that you want to spread, feel free to send it to me (jegx at ozone3d.net). I’ll be glad to publish it.

NVIDIA’s David Kirk Interview on CUDA, CPUs and GPUs

David Kirk, Nvidia’s Chief Scientist, has been interviewed by the guys at bit-tech.net.

Read the full interview HERE.

Here are some snippets of this 8-page interview:

– page 1:

“Kirk’s role within Nvidia sounds many times simpler than it actually is: he oversees technology progression and he is responsible for creating the next generation of graphics. He’s not just working on tomorrow’s technology, but he’s also working on what’s coming out the day after that, too.”

“I think that if you look at any kind of computational problem that has a lot of parallelism and a lot of data, the GPU is an architecture that is better suited than that. It’s possible that you could make the CPUs more GPU-like, but then you run the risk of them being less good at what they’re good at now”

“The reason for that is because GPUs and CPUs are very different. If you built a hybrid of the two, it would do both kinds of tasks poorly instead of doing both well,”

– page 2:

“Nvidia has talked about hardware support for double precision in the past—especially when Tesla launched—but there are no shipping GPUs supporting it in hardware yet”

“our next products will support double precision.”

“David talked about expanding CUDA other hardware vendors, and the fact that this is going to require them to implement support for C.”

“current ATI hardware cannot run C code, so the question is: has Nvidia talked with competitors (like ATI) about running C on their hardware?”

– page 3:

“It amazes me that people adopted Cell [the pseudo eight-core processor used in the PS3] because they needed to run things several times faster. GPUs are hundreds of times faster so really if the argument was right then, it’s really right now.”

From Lausanne to Paris

for this:

Something tells me my Parisian life won’t last very long…

But let’s not despair… there are still beautiful things in Paris. So I’ll take the opportunity to add a new category to this blog, Paris, and regularly post photos of various places in Paname… For this first post about Paris, here are a few photos in no particular order: