
News en vrac / WP sandbox

I must confess that SLI is a really cool technology. I assembled an SLI station based on the new nVidia GeForce 7600 GS series. This is a small graphics card compared to high-end ones like the 7900 GTX or the big monster 7950 GX2 (which, by the way, is really quiet in spite of its two GPUs and their fan-cooled heatsinks): a 7600 GS costs about 100 euros, a 7900 GTX about 400 euros, and the 7950 GX2 climbs to 500 euros! In terms of shader pipelines, the 7600 GS has 5 vertex pipes and 12 pixel pipes, the 7900 GTX has 8 vertex pipes and 24 pixel pipes, and the GX2 has 16 vertex pipes and 48 pixel pipes. So what? A 7600 GS SLI system offers almost the same level of GPU power as a 7800/7900 for half the price. I’ve verified this with the latest version of the soft shadows benchmark. With one 7600 GS (single GPU mode) the score is 510 o3Marks. With the two 7600 GS in SLI mode, the score jumps to 1004 o3Marks. The 7950 GX2’s score in single GPU mode is about 1200 o3Marks. OK, my bench is a small bench, you’re right. What about 3DMark06? The 3DMark06 score is 4115 for the 7600 GS SLI system, which is close to the scores obtained with a non-overclocked GeForce 7800/7900.

A small remark: my 7600 GS cards are not overclocked.

Conclusion: for 200 euros you can have an excellent graphics system which is also silent thanks to the 7600 GS passive cooler!
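To put the figures quoted above side by side, here is a small arithmetic sketch in Python. It only uses the scores and approximate prices given in this post; the function names are mine, and "o3Marks per euro" is just an illustrative metric, not something the benchmark reports.

```python
# Scores and approximate prices quoted in the post.
single_7600gs = 510      # o3Marks, one 7600 GS
sli_7600gs = 1004        # o3Marks, two 7600 GS in SLI
gx2_single_gpu = 1200    # o3Marks, 7950 GX2 in single GPU mode (approximate)

price_7600gs = 100       # euros, approximate
price_gx2 = 500          # euros, approximate


def sli_scaling(single_score, sli_score):
    """How many times faster the SLI setup is than a single card."""
    return sli_score / single_score


def marks_per_euro(score, price):
    """Illustrative value metric: benchmark points per euro spent."""
    return score / price


scaling = sli_scaling(single_7600gs, sli_7600gs)
sli_value = marks_per_euro(sli_7600gs, 2 * price_7600gs)
gx2_value = marks_per_euro(gx2_single_gpu, price_gx2)

print(f"SLI scaling: x{scaling:.2f}")
print(f"7600 GS SLI: {sli_value:.2f} o3Marks per euro")
print(f"7950 GX2 (single GPU mode): {gx2_value:.2f} o3Marks per euro")
```

With these numbers the second card almost doubles the score, which is about as good as SLI scaling can get.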

A new version of the Soft Shadows Benchmark is available, this time using uniform arrays to pass the blurring kernel to the pixel shader. On nVidia boards, there is a small speed increase (1 or 2 fps). On my X700… black screen… Houston, we’ve got a problem… This is with Catalyst 6.6. Okay, I tried the very latest Catalyst, 6.7. Bad idea, it’s worse! Neither version (with or without uniform arrays) works anymore with C6.7. Back to C6.6. That really sucks! :thumbdown:

But I’ve just received feedback telling me that the uniform arrays version works fine on an ATI X1600 Pro with C6.5. :thumbup:

Okay, there is certainly a problem with the X*** series and uniform arrays.
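For readers wondering what "passing the blurring kernel through uniform arrays" involves, here is a rough CPU-side sketch in Python of the data such arrays could hold. It assumes a plain 5×5 box filter over a 1024×1024 shadow map; the real weights used in the benchmark are not given here, and the names `box_blur_kernel`, `offsets` and `weights` are made up for the illustration.

```python
def box_blur_kernel(size, map_size):
    """Build texel offsets and weights for a size x size box blur.

    Assumption: equal weights (box filter). The offsets are expressed in
    texture coordinates, so one texel is 1 / map_size wide.
    """
    half = size // 2
    texel = 1.0 / map_size
    offsets = [(dx * texel, dy * texel)
               for dy in range(-half, half + 1)
               for dx in range(-half, half + 1)]
    weights = [1.0 / (size * size)] * (size * size)
    return offsets, weights


# 25 offsets and 25 weights, ready to be uploaded once to two uniform
# arrays (for instance a vec2 array and a float array) instead of being
# hard-coded in the shader.
offsets, weights = box_blur_kernel(5, 1024)
print(len(offsets), "taps, weights sum to", sum(weights))
```

The shader then simply loops over the two arrays, sampling the shadow map at the current coordinates plus each offset.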

I’ve just finished implementing soft shadows in the new oZone3D Engine. And I must say that soft shadows bring a huge amount of realism and credibility to 3D scenes. See for yourself:

The oZone3D tech demo is available here: Soft Shadows Demo

You can consider this demo as a little benchmark. Just start the oZone3D_SoftShadows_Benchmark.exe and look at the FPS in the title bar. With my current devstation I got the following score:

PC1: AMD64 3500+ / 1024M DDR400 / Radeon X700Pro 128M Catalyst 6.6 / WinXP SP2: 5 FPS :thumbdown:

Soft shadows are very GPU-intensive but they are the future of 3D! So to make the most of soft shadows, upgrade your graphics card!

I’ve just found in ATI’s excellent paper, “ATI OpenGL Programming and Optimization Guide”, that all ATI GPUs from the R300 (Radeon 9700) to the latest R580 (Radeon X1900) only support NEAREST filtering (and its mipmap variant) for depth maps. That explains the previous results. So if you want nVidia-like depth map filtering, you have to code the filtering yourself in the pixel shader. Okay, this answer suits me!
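To make "code the filtering yourself" a bit more concrete, here is a CPU-side sketch in Python of one common approach: fetch the four nearest depth texels, do the shadow comparison on each, and blend the four results bilinearly. This is an assumption about how one could do it, not the code of my shaders, and the function names are invented for the example. In a pixel shader the same steps would be written with four NEAREST texture fetches.

```python
import math


def fetch_nearest(depth_map, x, y):
    """NEAREST fetch with clamp-to-edge from a 2D list of depths."""
    height, width = len(depth_map), len(depth_map[0])
    x = min(max(x, 0), width - 1)
    y = min(max(y, 0), height - 1)
    return depth_map[y][x]


def filtered_shadow(depth_map, u, v, ref_depth):
    """Return a lit factor in [0, 1] at texture coordinates (u, v).

    Each of the 4 surrounding texels is compared against ref_depth
    (1.0 = lit, 0.0 = in shadow), then the results are blended with
    bilinear weights.
    """
    height, width = len(depth_map), len(depth_map[0])
    # Texel-space position, with texel centres at integer coordinates.
    x = u * width - 0.5
    y = v * height - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def lit(ix, iy):
        return 1.0 if ref_depth <= fetch_nearest(depth_map, ix, iy) else 0.0

    top = lit(x0, y0) * (1.0 - fx) + lit(x0 + 1, y0) * fx
    bottom = lit(x0, y0 + 1) * (1.0 - fx) + lit(x0 + 1, y0 + 1) * fx
    return top * (1.0 - fy) + bottom * fy


# Tiny 2x2 depth map: left column far from the light, right column close.
tiny_map = [[1.0, 0.2],
            [1.0, 0.2]]
print(filtered_shadow(tiny_map, 0.5, 0.5, 0.5))  # halfway between the columns
```

Note that the comparison is done before the blend. Blending the raw depth values first and comparing afterwards gives a hard edge again.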

ATI really has some problems with OpenGL. I’m now working on soft shadows and my temporary devstation has a Radeon X700 (not top-notch, I know, but a powerful enough graphics card). With my X700 (Catalyst 6.6) the soft shadow edges are rendered as follows:

And on my second graphics card, an nVidia 6600 GT (ForceWare 91.31), the soft shadows look like this:

The GLSL shaders are the same: a 5×5 blurring kernel, with a 1024×1024 shadow map (or depth map, if you prefer) rendered via an FBO with linear filtering. Now if I set the nearest filtering mode, I get the following results for the X700:

and for the 6600gt:

It seems as if the Radeon GPU has a bug in its filtering module when it has to apply a linear filter to a depth map. Very strange.

I’m not satisfied with this explanation but it’s the only one I can see for the moment.

This kind of problem shows how important it is for a graphics developer to have at least two workstations, one with an nVidia board and the other with an ATI card. I tell you, realtime 3D is made of blood, sweat and screams! :winkhappy:

It’s nice to come back to code!

I’m currently working on a new, simple framework for my OpenGL experiments before implementing the algorithms in the oZone3D Engine. RaptorGL is a little too heavy for simple tests so I’m dropping it for the moment. This new framework, which I called XPGL (eXPerimental Graphics Library), allows me to quickly test the new algorithms I’m working on. Every time I have to code a small but fully operational 3D demo in C++/OpenGL, I spend a lot of time for a small result. In those moments, I say to myself that Hyperion is a very cool tool.

Okay, let’s look at a weird behaviour of the Radeon GPU. At the moment, my graphics card is a Radeon X700. With the latest Catalyst drivers (6.6), this board should be OpenGL 2.0 compliant, and a quick check of GL_VERSION confirms it. The X700 should therefore handle non-power-of-two (NPOT) textures, since this feature is part of the OpenGL 2.0 core. But the GL_ARB_texture_non_power_of_two string is not found in GL_EXTENSIONS. Maybe ATI does not list the extensions that are part of the core. Anyway, I loaded a 600×445 NPOT texture on a mesh plane and the X700 seems to support it, but at a ridiculous 1 fps… Software codepath? I think so! So I loaded the same texture with power-of-two dimensions (512×512) and the fps became decent again. With my GeForce 6600 GT (ForceWare 91.31) I never noticed this effect/bug because the GL2 support is better and nVidia GPUs correctly support non-power-of-two textures. You can download the demo with the NPOT and POT textures (the one mapped onto the mesh plane) below and do the test for yourself. Feel free to send me feedback if you wish.

But keep in mind that graphics hardware is optimized for POT textures. Try to use POT textures to maximize the chances of your demo running everywhere.
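As a small helper for that advice, here is a Python sketch that checks whether texture dimensions are powers of two, computes the next POT size to pad or rescale to, and looks for an extension name in a GL_EXTENSIONS-style string. The function names are mine, and the extension string in the example is a made-up sample, not what the X700 actually reports.

```python
def is_power_of_two(n):
    """True for 1, 2, 4, 8, ... (a single bit set)."""
    return n > 0 and (n & (n - 1)) == 0


def next_power_of_two(n):
    """Smallest power of two that is >= n."""
    p = 1
    while p < n:
        p *= 2
    return p


def has_extension(extensions_string, name):
    """GL_EXTENSIONS is a space-separated list: compare whole tokens,
    since a plain substring search can match a longer extension name."""
    return name in extensions_string.split()


# The 600x445 texture from the test above.
width, height = 600, 445
print(is_power_of_two(width), is_power_of_two(height))
print(next_power_of_two(width), next_power_of_two(height))

# Hypothetical extension string, for illustration only.
sample = "GL_ARB_multitexture GL_ARB_shader_objects GL_ARB_vertex_buffer_object"
print(has_extension(sample, "GL_ARB_texture_non_power_of_two"))
```

Rounding up keeps all the detail at the cost of memory; scaling down to 512×512, as I did for the test, is the cheaper option.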

Just for fun (and for benchmarking too), I compiled the latest Ogre3D engine (v1.2.1).

For the test I used VC++ 2005 and the two following files available on www.ogre3d.org:

– OGRE 1.2.1 Source for Windows

– Dependencies 1.2.0 for Visual C++ 2005(8.0)

Unpack both archives and put the contents of the Dependencies archive into the ogre folder. Now you’re ready to load the ogre_vc8.sln solution. Once done, you have to enable the compiler timer with:

[i]Tools->Options->Projects->VC++ Build and setting Build Timing to Yes[/i].

Okay, everything is ready for the compilation of the OgreMain project.

On my workstation (P4 3.6 GHz, 2 GB DDR2 533, WD 200 GB SATA1 HDD), compilation and linking took: Build Time 12:04 (read 12 min 04 sec!) :raspberry:

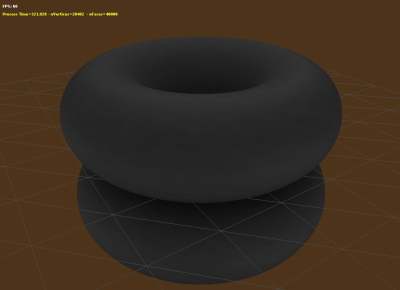

I’m currently working on a new algorithm for the ambient occlusion generator. The basic idea comes from smash, the main coder of Fairlight, a famous demoscene group (thank you mate!). My old AmbOccGen was (and still is) really slow: calculating the per-vertex AO term for a 40,000-poly object with 1000 samples could take many hours or even days! The following image shows a 40,000-poly scene (each torus has 20,000 polys), and the new algorithm took only 5 minutes to compute the ambient occlusion with 8192 samples! Really cool and I know I can do better…

I’ll release an end-user tool when the new version of oZone3D is ready. The new version of oZone3D is now a top-priority task (and a particularly huge one…).

The famous Blue Screen of Death is back:

It’s been a long time since I last saw it. I’m working on VBOs in a new eXPerimental 3D engine and I must have passed a wrong face offset to the index buffer. A little bug on my side, no doubt. As for a bug on the NVIDIA ForceWare side: I don’t know how drivers are supposed to behave when wrong parameters are sent to them, but I don’t think they should freeze your devstation!

More on this bug later…
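In the meantime, a cheap safeguard against this kind of mistake is to validate the index range on the CPU before issuing the draw call. The Python sketch below shows the idea. It is not code from my engine: `check_draw_range` and its parameters are hypothetical names, and I assume the indices are still available as a plain list on the application side.

```python
def check_draw_range(indices, vertex_count, first, count):
    """Return a list of problems found; an empty list means the range
    [first, first + count) can be sent to the driver safely."""
    problems = []
    if first < 0 or count < 0 or first + count > len(indices):
        problems.append(
            f"range [{first}, {first + count}) is outside the index "
            f"buffer (size {len(indices)})")
        return problems
    bad = [i for i in indices[first:first + count]
           if not 0 <= i < vertex_count]
    if bad:
        problems.append(
            f"{len(bad)} index value(s) point outside the "
            f"{vertex_count} vertices")
    return problems


# Two triangles sharing an edge, 4 vertices.
quad_indices = [0, 1, 2, 2, 1, 3]
print(check_draw_range(quad_indices, 4, 0, 6))   # whole buffer
print(check_draw_range(quad_indices, 4, 3, 6))   # offset too large
```

A check like this is best kept in debug builds only, since scanning every index on each draw call is not free.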

I’ve just received an email from a user saying that he wasn’t able to run the demos of the Vertex Displacement Mapping Tutorial on his brand new Radeon X1900XTX. VTF, or Vertex Texture Fetching, is a cool feature of high-end graphics chipsets and it’s part of Shader Model 3.0. The X1900 series is based on the R500 family (R580), which is an SM3.0-compliant GPU. But on the OpenGL side, and especially in GLSL, VTF is not supported. The OpenGL query on GL_MAX_VERTEX_TEXTURE_IMAGE_UNITS_ARB always returns 0, which means that no texture units are available in the vertex shader.

ATI confirms this in one of the whitepapers shipped with the ATI SDK (ATI OpenGL Programming and Optimization Guide.pdf). On page 11, we can read this: [i]“All ATI graphics HW have a few items that deserve special consideration when using GLSL. The first major item of note is the absence of vertex texture units. This means that vertex texturing is never available, and all shaders attempting to use texture functions in the vertex shader will fail to link.”[/i] I know, it’s a harsh reality. The R580 GPU is really powerful and it’s a pity that ATI does not support VTF in its chipsets. I don’t know how the R580 behaves on the D3D side but I suppose the GPU has the same limitations. VTF is currently supported by GeForce chipsets from the 6600 to the 7900. Conclusion: if you wish to play with VTF, use an nVidia board.

Maybe all these problems will be solved with SM4.0. I hope! :winkhappy: